One 60x60 sample is processed by a 2x2 group of processors. Since Parallella can have a 4x4 or an 8x8 grid, this allows 4 or 16 groups, respectively, to work independently, either on different samples or on the same sample rotated (but that's for another post).
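To make the grouping concrete, here is a minimal sketch of how a core's position on the grid could map to a 2x2 group. The function names and the row/column numbering are hypothetical, chosen only for illustration; they are not taken from the Epiphany SDK.

```python
GROUP_DIM = 2  # each group is a 2x2 block of cores


def count_groups(grid_dim, group_dim=GROUP_DIM):
    """Number of independent groups on a grid_dim x grid_dim chip."""
    return (grid_dim // group_dim) ** 2


def locate_core(row, col, group_dim=GROUP_DIM):
    """Return ((group_row, group_col), (local_row, local_col)) for a core.

    The first pair says which group the core belongs to, the second
    pair says which quarter of the sample it would handle in that group.
    """
    group = (row // group_dim, col // group_dim)
    local = (row % group_dim, col % group_dim)
    return group, local


if __name__ == "__main__":
    for grid_dim in (4, 8):
        print(grid_dim, "x", grid_dim, "grid:", count_groups(grid_dim), "groups")
    print(locate_core(3, 2))
```

On a 4x4 grid this gives 4 groups and on an 8x8 grid 16 groups, matching the counts above.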

Now by layers:

Layer 0: each processor has a 30x30 chunk of the image.

Layer 1: 16 5x5 filters are applied + 2x2 max-pooling: output 14x14x16.

Layer 2: 32 5x5x16 filters + 2x2 max-pooling: output 6x6x32.

Layer 3: 48 3x3x32 filters are applied + 2x2 max-pooling: output 3x3x48 with overlap. The size of the full matrix at this stage is 5x5x48.

Layer 4: 64 3x3x48 convolutions. In the paper this is an unshared convolution, so the weights are different at each spot. I am still not sure whether I will do it unshared or replace it with a regular convolution. TECHNICALLY the amount of computation on each core is unchanged, but the amount of data that has to be copied back and forth between ARM and Epiphany is ginormous... So regular convolution for now.

Also, since this layer a) doesn't have max-pooling, b) is followed by a fully-connected layer and c) the input is already so small in spatial dimensions, the output is partitioned a bit differently. Instead of each core applying the same filters to its chunk of data, it will now apply DIFFERENT filters to the FULL matrix. So, in the output, each core will have a 3x3x16 chunk of the full 3x3x64 matrix.
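The sizes above can be checked with a short sketch. It assumes stride-1 convolutions without padding and non-overlapping 2x2 pooling, which is what the numbers imply but is not spelled out; all names here are hypothetical.

```python
import math

GROUP_DIM = 2  # cores per side in one group

# (kernel size, number of filters, followed by 2x2 max-pooling?)
LAYERS = [(5, 16, True), (5, 32, True), (3, 48, True), (3, 64, False)]


def full_shapes(input_size=60):
    """Output shape of each layer for the whole sample (all 4 cores together)."""
    size, shapes = input_size, []
    for kernel, filters, pooled in LAYERS:
        size = size - kernel + 1          # unpadded, stride-1 convolution
        if pooled:
            size //= 2                    # non-overlapping 2x2 max-pooling
        shapes.append((size, size, filters))
    return shapes


def per_core_side(size, group_dim=GROUP_DIM):
    """Side of one core's spatial chunk; odd sizes round up, hence the overlap."""
    return math.ceil(size / group_dim)


def filters_for_core(core_index, total_filters=64, cores=GROUP_DIM ** 2):
    """Layer 4 split: each core gets its own slice of the filters."""
    per_core = total_filters // cores
    return range(core_index * per_core, (core_index + 1) * per_core)


if __name__ == "__main__":
    for shape in full_shapes():
        print("full:", shape, "per-core side:", per_core_side(shape[0]))
    print("core 3 applies filters", list(filters_for_core(3))[0], "to", list(filters_for_core(3))[-1])
```

The full-sample shapes come out as 28x28x16, 12x12x32, 5x5x48 and 3x3x64, so the per-core chunks for layers 1 to 3 are 14x14, 6x6 and 3x3. A 30x30 chunk on its own would give only 13x13 after layer 1, so the 14x14 figure suggests each core also needs a small border from its neighbours; this is an inference from the numbers rather than something stated above.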

Given that the output of every layer has to be preserved for back-propagation, on each core we have:

(30x30x1 + 14x14x16 + 6x6x32 + 3x3x48 + 3x3x16)*(4 bytes) = 22.5 KB

Out of 32 KB of local memory per core :(

Hope I have enough memory to do backpropagation without swapping out stuff :(
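The arithmetic can be reproduced with a few lines. This is only a sketch of the budget: the shapes are the per-core outputs listed above, 4 bytes per value is taken from the formula, and the constant and function names are hypothetical.

```python
BYTES_PER_VALUE = 4               # 4 bytes per stored value, as in the formula
LOCAL_MEMORY_BYTES = 32 * 1024    # 32 KB of local memory per core

# Per-core output of every layer that has to stay resident
PER_CORE_OUTPUTS = [
    ("layer 0", (30, 30, 1)),
    ("layer 1", (14, 14, 16)),
    ("layer 2", (6, 6, 32)),
    ("layer 3", (3, 3, 48)),
    ("layer 4", (3, 3, 16)),
]


def activation_bytes(outputs=PER_CORE_OUTPUTS):
    """Total bytes needed to keep all listed layer outputs on one core."""
    values = sum(h * w * c for _, (h, w, c) in outputs)
    return values * BYTES_PER_VALUE


if __name__ == "__main__":
    used = activation_bytes()
    left = LOCAL_MEMORY_BYTES - used
    print(f"{used} bytes = {used / 1024:.1f} KB used, {left / 1024:.1f} KB left")
    # prints: 23056 bytes = 22.5 KB used, 9.5 KB left
```

The remaining 9.5 KB or so is an upper bound, since the same local memory also has to hold whatever else lives on the core.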


Within the last week or so

- downloaded the datasets that were used in 300 Faces in the Wild.

- implemented basic functions like image pre-processing, convolution + max-pooling etc.

Still not sure what to do with the unshared convolution, plus there are some other details that were not specified in the paper.

Will write up more about the use of shared memory when I have time.


So the board works fine, and apparently it worked fine all along.

Except for the USB bug. Apparently to get USB working I'll need to vandalize a USB cable.

Might try that some time, but for now I've connected it to my home router and SSH'ed into it. Examples run fine, now trying simple stuff of my own...


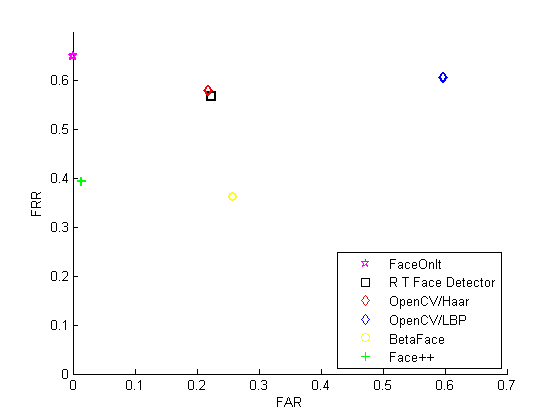

Last week was quite busy with midterms (plus there was nobody in the lab, and I don't have keys :) so I wasn't able to start fiddling with the board. Instead, since there is an obvious winner among the algorithms, I decided to start looking at it.

The basis is described here: http://research.faceplusplus.com/face-r ... -iccv2013/. In short, it uses a cascade of deep convolutional neural networks. Since I'm not doing face recognition and don't need to know the exact shape of an eyebrow, I will only need the first two, at most three.

A few papers that might be useful:

Interesting paper on parallelizing the ConvNet

Not ConvNet, but a lot of implementation details on GPU.

On training ConvNets

Another implementation of a Neural Net on GPU


The image set can be downloaded from here.

Full test results as Excel file.
